LiteLLM

LiteLLM gives you a single, unified API for calling 100+ LLMs.

Running LiteLLM

Pull the Docker image:

```bash
docker pull ghcr.io/berriai/litellm:main-latest
```

Create a config file named `litellm_config.yaml`, either in the current directory or anywhere else:

```yaml
model_list:
  - model_name: gpt-3.5-turbo
    litellm_params:
      model: azure/my_azure_deployment
      api_base: os.environ/AZURE_API_BASE
      api_key: "os.environ/AZURE_API_KEY"
      api_version: "2024-07-01-preview"
```

Start the server:

```bash
# replace PATH/TO with the absolute path to the directory containing your config file
docker run \
    -v PATH/TO/litellm_config.yaml:/app/config.yaml \
    -e AZURE_API_KEY=d6*********** \
    -e AZURE_API_BASE=https://openai-***********/ \
    -p 4000:4000 \
    ghcr.io/berriai/litellm:main-latest \
    --config /app/config.yaml --detailed_debug
```
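Before you add the provider in ChatWise, you can check that the proxy actually answers requests. The sketch below makes several assumptions. The container is reachable on `localhost:4000` because of `-p 4000:4000`. No master key is configured in `litellm_config.yaml`, so the request sends no `Authorization` header. The model name matches the `model_name` entry above. The function name `litellm_smoke_test` is hypothetical. It is a thin wrapper around `curl` that targets the proxy's OpenAI-style `/v1/chat/completions` route.

```bash
#!/usr/bin/env bash
# Hypothetical helper: sends one chat request to a running LiteLLM proxy.
# Assumes no master key is configured (no Authorization header is sent)
# and that the model argument matches a model_name in litellm_config.yaml.
litellm_smoke_test() {
  local base_url="${1:-http://localhost:4000}"  # proxy address (from -p 4000:4000)
  local model="${2:-gpt-3.5-turbo}"             # model_name from the config
  # --fail makes curl exit non-zero on HTTP errors; --max-time bounds the wait.
  curl -sS --fail --max-time 60 "${base_url}/v1/chat/completions" \
    -H "Content-Type: application/json" \
    -d "{\"model\": \"${model}\", \"messages\": [{\"role\": \"user\", \"content\": \"Say hello\"}]}"
}

# Usage: litellm_smoke_test [base_url] [model]
# litellm_smoke_test http://localhost:4000 gpt-3.5-turbo
```

Run `litellm_smoke_test` with no arguments to use the defaults. A JSON chat completion in the output suggests that the proxy picked up both the config and the Azure credentials. If the call fails, check the container logs first, which `--detailed_debug` makes verbose.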

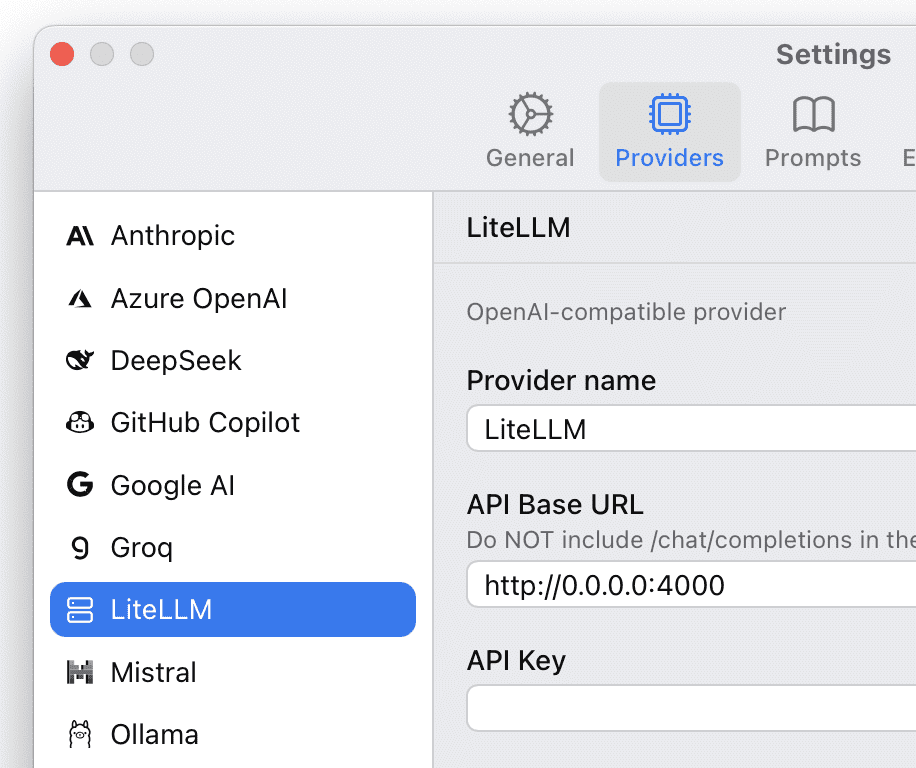

The server is now running on `http://0.0.0.0:4000`.

Finally, create an OpenAI-compatible provider in ChatWise and enter the URL: